Getting Started with Gemma Tokenizer including Multilingual Testing

See Also

- Part 1 - Quick Start with Gemma (To be published)

- Part 2 - Getting Started with Gemma Tokenizer including Multilingual Testing

- Part 3 - Quick Start to Gemma LoRA Fine-Tuning

- Part 4 - Quick Start to Gemma Korean LoRA Fine-Tuning

- Part 5 - Quick Start to Gemma English-Korean Translation LoRA Fine-Tuning

- Part 6 - Quick Start to Gemma Korean-English Translation LoRA Fine-Tuning

- Part 7 - Quick Start to Gemma Korean SQL Chatbot LoRA Fine-Tuning

Below is an introductory guide for developers on how to get started with the Gemma Tokenizer using the keras-nlp library. It covers installation, configuration, and basic usage examples across multiple languages to demonstrate the versatility of the Gemma Tokenizer.

View on AIFactory » https://aifactory.space/page/keraskorea/forum/discussion/854

Installation

First, ensure that you have the latest versions of the necessary libraries. This includes installing keras-nlp for NLP utilities and updating keras to its latest version to ensure compatibility.

# Install the required keras-nlp library

!pip install -q -U keras-nlp

# Update keras to the latest version

!pip install -q -U keras>=3

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m465.2/465.2 kB[0m [31m8.9 MB/s[0m eta [36m0:00:00[0m

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m950.8/950.8 kB[0m [31m15.1 MB/s[0m eta [36m0:00:00[0m

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m5.2/5.2 MB[0m [31m30.6 MB/s[0m eta [36m0:00:00[0m

[?25h[31mERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

tensorflow 2.15.0 requires keras<2.16,>=2.15.0, but you have keras 3.0.5 which is incompatible.[0m[31m

[0m

Configuration

Before using the Gemma Tokenizer, you need to configure your environment. This involves setting up Kaggle API credentials (if you’re planning to use datasets or models hosted on Kaggle) and specifying the backend for Keras. In this guide, we’re using JAX for its performance benefits.

Setting Up Kaggle API Credentials

# Import necessary libraries for environment setup

import os

from google.colab import userdata

# Set Kaggle API credentials in the environment variables

os.environ["KAGGLE_USERNAME"] = userdata.get('KAGGLE_USERNAME')

os.environ["KAGGLE_KEY"] = userdata.get('KAGGLE_KEY')

Specifying the Backend

# Set the KERAS_BACKEND to 'jax'. You can also use 'torch' or 'tensorflow'.

os.environ["KERAS_BACKEND"] = "jax" # JAX backend is preferred for its performance.

# Avoid memory fragmentation when using the JAX backend.

os.environ["XLA_PYTHON_CLIENT_MEM_FRACTION"]="1.00"

Initialization

Now, let’s import the necessary libraries and initialize the Gemma Tokenizer with a preset configuration. This example uses an English model, but Gemma supports multiple languages.

# Import keras and keras_nlp for tokenizer functionality

import keras

import keras_nlp

# Initialize the GemmaTokenizer with a preset configuration

tokenizer = keras_nlp.models.GemmaTokenizer.from_preset("gemma_2b_en")

Attaching 'tokenizer.json' from model 'keras/gemma/keras/gemma_2b_en/2' to your Colab notebook...

Attaching 'tokenizer.json' from model 'keras/gemma/keras/gemma_2b_en/2' to your Colab notebook...

Attaching 'assets/tokenizer/vocabulary.spm' from model 'keras/gemma/keras/gemma_2b_en/2' to your Colab notebook...

[ 4521 235269 15674 235265]

tf.Tensor(b'Hello, Korea.', shape=(), dtype=string)

Basic Usage

Here’s how to tokenize and detokenize a sample text. Additionally, we’ll define a function to convert token IDs to strings and print tokens with sequential colors for better visualization.

Tokenizing and Detokenizing Text

tokens = tokenizer("Hello, Korea! I'm Gemma.")

print(tokens)

strings = tokenizer.detokenize(tokens)

print(strings)

[ 4521 235269 15674 235341 590 235303 235262 137061 235265]

tf.Tensor(b"Hello, Korea! I'm Gemma.", shape=(), dtype=string)

Visualizing Tokens with Colors

We define a helper function to apply colors to tokens sequentially and print them. This enhances readability and helps in analyzing the tokenization output visually.

# Import itertools for later use in color cycling

import itertools

# Import JAX's version of NumPy and alias it as jnp

# Define a function to convert tokens to strings

def tokens2string(tokens):

# Initialize a list to hold the token strings

token_string = []

# Convert each token ID to a string using the tokenizer

for token_id in tokens:

# Convert the token ID to a numpy array and get the actual integer ID

token_id_numpy = jnp.array(token_id).item()

# Detokenize the single token ID to get the raw string

raw_string = tokenizer.detokenize([token_id_numpy])

# Convert the raw string to bytes

byte_string = raw_string.numpy()

# Decode the byte string to UTF-8 to get the decoded string

decoded_string = byte_string.decode('utf-8')

# Append the decoded string to the token_string list

token_string.append(decoded_string)

# Return the list of token strings

return token_string

# Color definitions using ANSI 24-bit color codes

colors = {

"pale_purple": "\033[48;2;202;191;234m",

"light_green": "\033[48;2;199;236;201m",

"pale_orange": "\033[48;2;241;218;176m",

"soft_pink": "\033[48;2;234;177;178m",

"light_blue": "\033[48;2;176;218;240m",

"reset": "\033[0m"

}

# Function to apply sequential colors to tokens and print them

def print_colored_tokens(tokens):

# Convert tokens to strings

token_strings = tokens2string(tokens)

# Count the number of tokens

token_count = len(token_strings)

# Count the total number of characters across all tokens

char_count = sum(len(token) for token in token_strings)

# Print the Gemma Tokenizer info and counts

print(f"Tokens: {token_count} | Characters: {char_count}")

# Create a cycle iterator for colors to apply them sequentially to tokens

color_cycle = itertools.cycle(colors.values())

for token in token_strings:

color = next(color_cycle) # Get the next color from the cycle

if color == colors['reset']: # Skip the reset color to avoid resetting prematurely

color = next(color_cycle)

# Print each token with the selected color and reset formatting at the end

print(f"{color}{token}{colors['reset']}", end="")

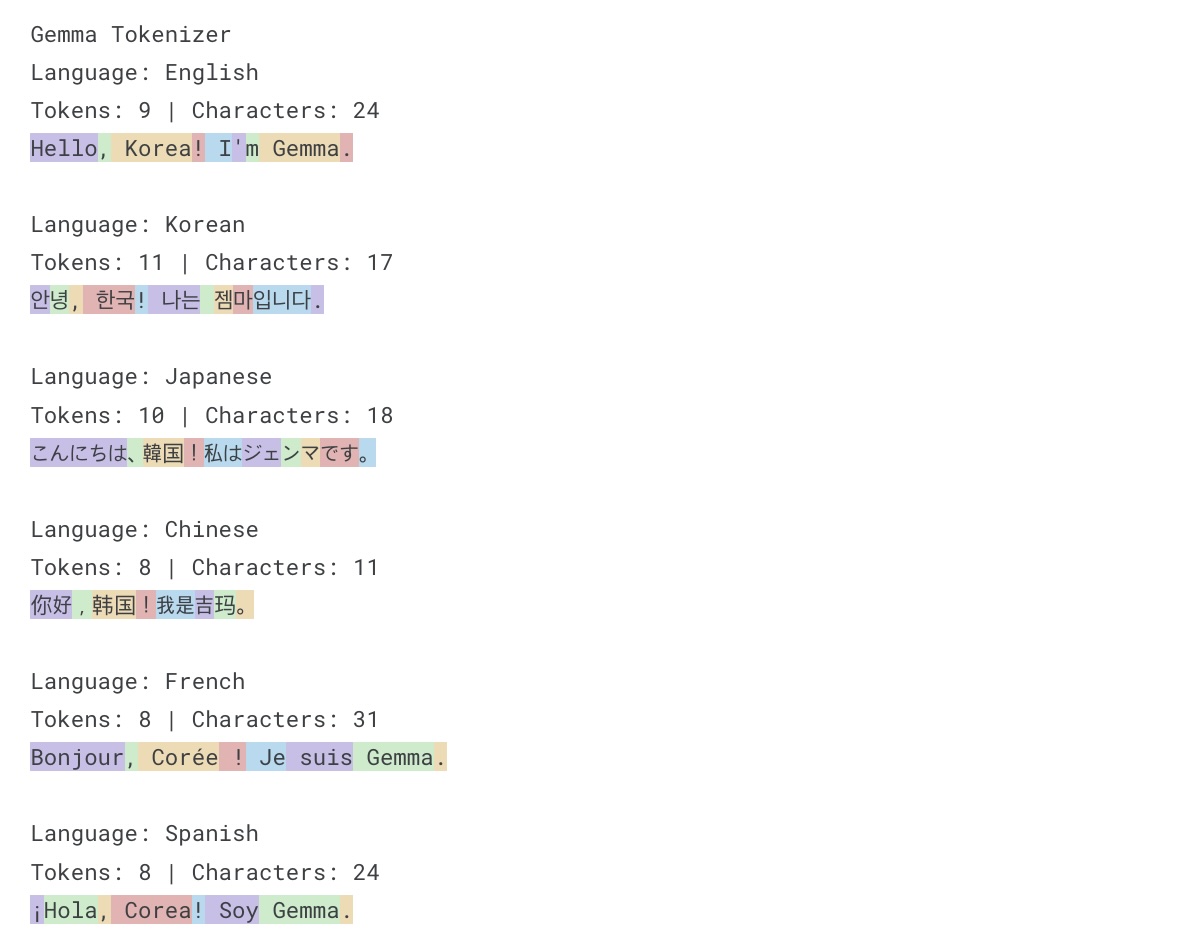

Testing Across Multiple Languages

To demonstrate the Gemma Tokenizer’s versatility, we provide examples in English, Korean, Japanese, Chinese, French, and Spanish. This showcases its ability to handle a wide range of languages effectively.

test_sentences = {

"English": "Hello, Korea! I'm Gemma.",

"Korean": "안녕, 한국! 나는 젬마입니다.",

"Japanese": "こんにちは、韓国!私はジェンマです。",

"Chinese": "你好,韩国!我是吉玛。",

"French": "Bonjour, Corée ! Je suis Gemma.",

"Spanish": "¡Hola, Corea! Soy Gemma."

}

print("Gemma Tokenizer")

for language, sentence in test_sentences.items():

print(f"Language: {language}")

tokens = tokenizer(sentence)

print_colored_tokens(tokens)

print("\n")

책 소개

[추천사]

- 하용호님, 카카오 데이터사이언티스트 - 뜬구름같은 딥러닝 이론을 블록이라는 손에 잡히는 실체로 만져가며 알 수 있게 하고, 구현의 어려움은 케라스라는 시를 읽듯이 읽어내려 갈 수 있는 라이브러리로 풀어준다.

- 이부일님, (주)인사아트마이닝 대표 - 여행에서도 좋은 가이드가 있으면 여행지에 대한 깊은 이해로 여행이 풍성해지듯이 이 책은 딥러닝이라는 분야를 여행할 사람들에 가장 훌륭한 가이드가 되리라고 자부할 수 있다. 이 책을 통하여 딥러닝에 대해 보지 못했던 것들이 보이고, 듣지 못했던 것들이 들리고, 말하지 못했던 것들이 말해지는 경험을 하게 될 것이다.

- 이활석님, 네이버 클로바팀 - 레고 블럭에 비유하여 누구나 이해할 수 있게 쉽게 설명해 놓은 이 책은 딥러닝의 입문 도서로서 제 역할을 다 하리라 믿습니다.

- 김진중님, 야놀자 Head of STL - 복잡했던 머릿속이 맑고 깨끗해지는 효과가 있습니다.

- 이태영님, 신한은행 디지털 전략부 AI LAB - 기존의 텐서플로우를 활용했던 분들에게 바라볼 수 있는 관점의 전환점을 줄 수 있는 Mild Stone과 같은 책이다.

- 전태균님, 쎄트렉아이 - 케라스의 특징인 단순함, 확장성, 재사용성을 눈으로 쉽게 보여주기 위해 친절하게 정리된 내용이라 생각합니다.

- 유재준님, 카이스트 - 바로 적용해보고 싶지만 어디부터 시작할지 모를 때 최선의 선택입니다.