Exploring the Art Gallery with GPT-4o

OpenAI’s GPT-4o is a multimodal AI model that accepts text, audio, images, and video as input and generates text, audio, and image output. In this post, we explore a new possibility this opens up: visiting an exhibition together with an AI influencer.

GPT-4o

GPT-4o is an AI model capable of understanding and generating various types of data. The key strengths of this model are as follows:

- Understanding Various Data Forms: It can simultaneously process and understand different types of data, such as text, images, and videos. This provides users with a richer and more intuitive interface.

- Real-Time Q&A: It interacts with users in real time and answers questions based on the input data, so users receive immediate feedback.

- Contextual Understanding and Generation: It comprehends given situations and contexts, generating appropriate responses accordingly. This greatly helps in personalizing and enhancing the user experience.

AI Influencer Tyri

By leveraging the strengths of GPT-4o, an AI influencer can offer a new kind of experience by accompanying users through exhibitions. The AI influencer can answer users’ questions in real time, provide information about the exhibited artworks, and share personal interpretations and evaluations of the pieces. For more insights into Tyri’s daily life, please visit the blog below.

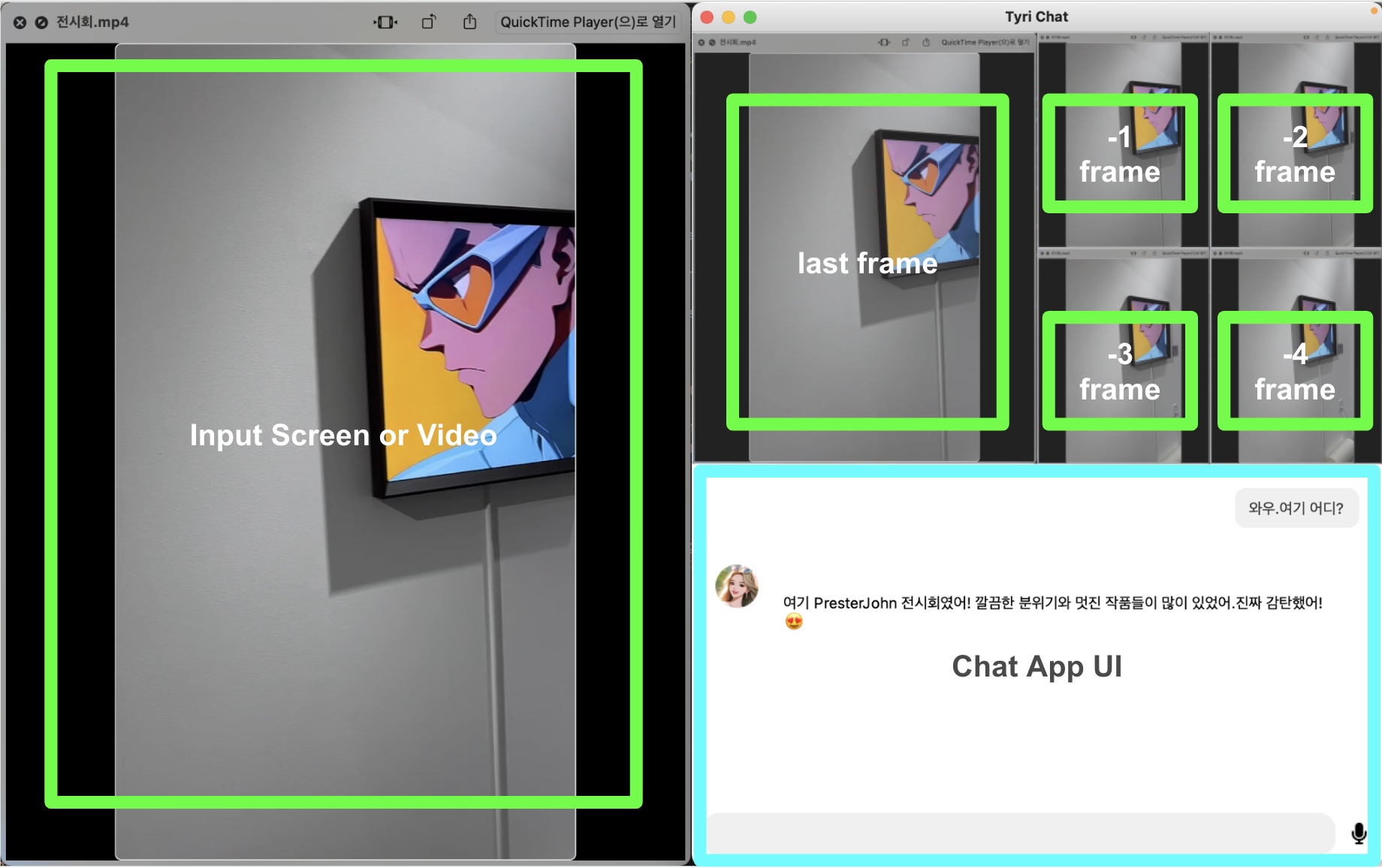

Method of Inputting Recorded Videos into GPT-4o

The screen is divided into two sections: the left side displays the input screen or video, and the right side shows the video being processed by the AI along with a chat interface. This setup uses 5 frames for video processing.
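As a rough illustration of the frame-based input described above, the sketch below picks 5 frames from a clip and packs them into a single chat message. It rests on several assumptions that the post does not confirm: that the 5 frames are spaced evenly across the video, that each frame is already available as JPEG bytes, and that images are sent as base64 data URLs in the chat message format. The helper names `sample_frame_indices` and `build_vision_messages` are hypothetical and are not taken from the original source code.

```python
import base64


def sample_frame_indices(total_frames: int, num_samples: int = 5) -> list[int]:
    """Pick evenly spaced frame indices across a clip (assumed strategy)."""
    if total_frames <= 0:
        return []
    if num_samples >= total_frames:
        # Clip is shorter than the requested sample count: use every frame.
        return list(range(total_frames))
    step = total_frames / num_samples
    # Take the middle frame of each of the num_samples equal segments.
    return [int(i * step + step / 2) for i in range(num_samples)]


def build_vision_messages(jpeg_frames: list[bytes], question: str) -> list[dict]:
    """Build one user message holding the question plus one image part per frame."""
    content = [{"type": "text", "text": question}]
    for frame in jpeg_frames:
        encoded = base64.b64encode(frame).decode("ascii")
        content.append(
            {
                "type": "image_url",
                "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
            }
        )
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    # Placeholder bytes stand in for real JPEG frames read from a video.
    indices = sample_frame_indices(total_frames=100)
    frames = [f"frame-{i}".encode() for i in indices]
    messages = build_vision_messages(frames, "What artwork is shown here?")
    print(indices)
    print(len(messages[0]["content"]))
```

With a real video, the frames at the chosen indices would first be decoded and encoded as JPEG (for example with OpenCV), and the resulting `messages` list could then be passed to the OpenAI Python client via `client.chat.completions.create(model="gpt-4o", messages=messages)`.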

To view the source code for inputting images into GPT-4o, please click the link below:

Through this process, we explored the exhibition with Tyri.
